Expanding what can be covered by automated rules engines.

For a while now, Machine Learning has been a buzzword in the Accessibility industry. Now a platform that uses Machine Learning to make real progress is here.

By building Machine Learning into our rules engines, Deque is able to break coverage barriers that otherwise seem impassable. In particular, it becomes possible to write rules for custom-built controls. Rules are no longer limited to Stock controls and their properties. We can now infer meaning from the visual aspects of controls and assert things about the way they should behave.

More simply, we use Machine Learning as the “eyes” of an Accessibility Expert: our Image Recognition Machine Learning model detects visually distinguishable types of controls and feeds them to our rules engine. We then combine this with our knowledge of what those types should mean for the Accessibility of the Platform. Our rules run and ensure that the properties sent to Assistive Technologies match those that would be expected visually.

This is all pretty complicated, so let’s dig a little deeper.

Traditional Rules Engines

To understand the value that Machine Learning brings to Accessibility Rules, it is important to first understand how a typical rules engine works. Let’s examine a simplistic example of a rule running on Android.

A Basic Switch in a Linear Layout

<LinearLayout>
  <TextView>The Lights</TextView>
  <Switch>On</Switch>
</LinearLayout>

From this simplified bit of View Hierarchy, we can see a simple Accessibility Issue: the Switch does not appear to be properly associated with the TextView. We can recommend a fix. Here is how one might fix this issue.

<LinearLayout>
  <TextView labelFor="switchId">The Lights</TextView>
  <Switch id="switchId">On</Switch>
</LinearLayout>

This is all great. This is a very simple Accessibility Issue to find and the solution is consistent. A traditional rules engine can find such issues very easily.
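As a rough illustration of how such a rule might work, here is a minimal Python sketch that walks the simplified markup above and flags any Switch that no TextView points to. The function name `find_unlabeled_switches` and the bare `id` and `labelFor` attributes are assumptions made for this example; this is not how Deque's rules engine is implemented, and real Android layouts use namespaced attributes and resource references.

```python
import xml.etree.ElementTree as ET

# Simplified, hypothetical view hierarchies mirroring the snippets above.
BROKEN_LAYOUT = """
<LinearLayout>
  <TextView>The Lights</TextView>
  <Switch>On</Switch>
</LinearLayout>
"""

FIXED_LAYOUT = """
<LinearLayout>
  <TextView labelFor="switchId">The Lights</TextView>
  <Switch id="switchId">On</Switch>
</LinearLayout>
"""


def find_unlabeled_switches(layout_xml):
    """Return (tag, text) for each Switch that no TextView labels."""
    root = ET.fromstring(layout_xml.strip())
    # Collect every id that some TextView claims to label.
    labeled_ids = {
        view.get("labelFor")
        for view in root.iter("TextView")
        if view.get("labelFor") is not None
    }
    violations = []
    for switch in root.iter("Switch"):
        # A Switch with no id, or an id nobody points to, is unlabeled.
        if switch.get("id") not in labeled_ids:
            violations.append((switch.tag, switch.text))
    return violations


print(find_unlabeled_switches(BROKEN_LAYOUT))
print(find_unlabeled_switches(FIXED_LAYOUT))
```

The check only ever reads the view tree: element names and attributes. That is what makes it cheap and consistent for Stock controls.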

However, this style of rule is somewhat limited. Notice that in this example ALL we have done is look at View Tree information. That works GREAT when you’re using Stock Android Controls, because Stock Controls have known expectations that must be met to be accessible.

A Custom Button Class

But what if your app is full of Custom Controls? Let’s consider a different example: a simple +1 button.

<LinearLayout>
  <MyCustomImageButton></MyCustomImageButton>
</LinearLayout>

Notice that the text is embedded within the image. This is a scenario that traditional rules engines would struggle with. A traditional rules engine can classify this as neither a Button nor an Image, because the ClassName doesn’t match either type of Control.

The appropriate markup for this is obviously

<MyCustomImageButton contentDescription="+1"></MyCustomImageButton>

but there is no way for a traditional rules engine to know this!
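To make the limitation concrete, here is a small Python sketch of a rule selector that works purely from class names. The lookup table `STOCK_CONTROL_TYPES`, the rule name `requires_content_description`, and both functions are hypothetical and invented for this example; they only illustrate the class-name matching described above.

```python
# Hypothetical table of Stock class names a view-tree-only engine recognizes.
STOCK_CONTROL_TYPES = {
    "Button": "button",
    "ImageButton": "button",
    "ImageView": "image",
    "Switch": "switch",
    "TextView": "text",
}


def classify_by_class_name(class_name):
    """Return the control type for a Stock class name, or None if unknown."""
    return STOCK_CONTROL_TYPES.get(class_name)


def rules_that_apply(class_name):
    """Choose rules from the class name alone, with no visual information."""
    control_type = classify_by_class_name(class_name)
    if control_type in ("button", "image"):
        return ["requires_content_description"]
    # Unrecognized classes get no type-specific rules at all.
    return []


print(rules_that_apply("ImageButton"))
print(rules_that_apply("MyCustomImageButton"))
```

Because `MyCustomImageButton` is not in the table, no Button or Image rule is ever selected for it, even though a sighted user would recognize it as a button at a glance.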

Enter Machine Learning

This is where the power of Machine Learning comes in.

Here we can see that Machine Learning has identified this Control as a Button.

<LinearLayout>
  <MyCustomImageButton></MyCustomImageButton> -> Should be Button
</LinearLayout>

Now, knowing that MyCustomImageButton should be a Button, we can assert that this Control should have a ContentDescription and thereby recommend the correct fix, along with the other fixes that apply to custom Button controls:

<MyCustomImageButton contentDescription="+1"></MyCustomImageButton>

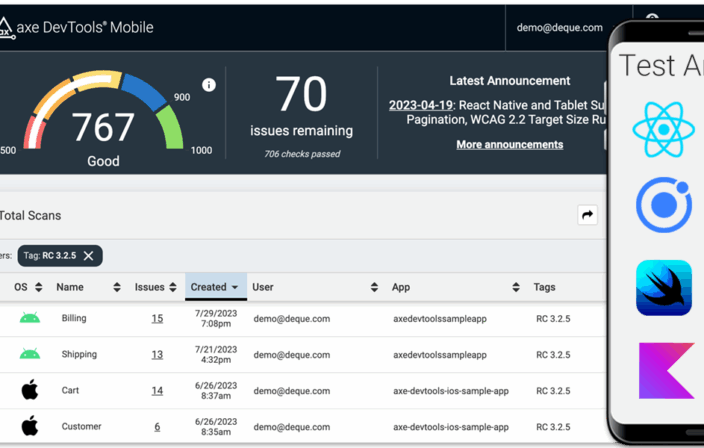
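The idea of combining a visual prediction with a rule can be sketched in a few lines of Python. Everything here is an assumption for illustration: the `View` class, the `check_view` function, and especially `stub_visual_classifier`, which stands in for a real image recognition model and simply always answers "Button". It is not Deque's model or API.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class View:
    class_name: str
    content_description: Optional[str] = None
    screenshot: bytes = b""  # rendered pixels of the control


def stub_visual_classifier(screenshot: bytes) -> str:
    """Hypothetical stand-in for an image model; always predicts 'Button'."""
    return "Button"


def check_view(view: View, classify: Callable[[bytes], str]) -> List[str]:
    """Apply Button rules based on how the control looks, not its class name."""
    predicted_type = classify(view.screenshot)
    findings = []
    if predicted_type == "Button" and not view.content_description:
        findings.append(
            f"{view.class_name} looks like a Button but has no contentDescription"
        )
    return findings


print(check_view(View("MyCustomImageButton"), stub_visual_classifier))
print(check_view(View("MyCustomImageButton", "+1"), stub_visual_classifier))
```

The rule itself stays as simple as a traditional one. The only change is where the control type comes from: a prediction made from the rendered image rather than a lookup on the ClassName.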

Announcing Machine Learning Rules

Deque has officially released its first Machine Learning Rule for the Android platform. The new rule is available to current customers who upload their results to our Mobile server. When you view the details of an Android scan, you will see a new category of violation: in addition to the violations found by our axe rules, there is a new list, called Machine Learning, that shows violations found with our new capability. Documentation for existing customers is available behind login. If you are not a current customer, please contact us to learn more.

This is just the start. This approach to Rule creation should allow us to add at least 50% more rules over the coming releases and continue to push the boundaries of Accessibility Issues that can be detected automatically well past competing products.